Christmas Eve. Seventy-six-year-old Johan is having a family dinner with his children and grandchildren. Although he is very happy that everyone is together, he feels lonely.

Johan suffers from hearing loss. He wears a hearing aid that amplifies sound, but the device has no way of knowing which person he wants to listen to. The resulting auditory chaos leads Johan to switch his hearing aid off.

Unable to follow any single conversation, he feels isolated even in a room full of family. This is what the author Helen Keller meant when she said that “blindness cuts us off from things, while deafness cuts us off from people”.

Cocktail party chaos

Like Johan, 1 in 10 Belgians suffers from hearing loss. According to the World Health Organization, hearing loss will become more common in the coming decades, partly because of the aging population: by 2050, 1 in 4 people worldwide is expected to be affected. Developing smart hearing aids that can listen in a targeted manner is therefore of paramount importance to the wellbeing of millions.

Unfortunately, current hearing aids do not work well in cocktail-party situations when several people are speaking at the same time. At receptions, parties, and dinner tables, the hearing aid simply amplifies all speakers equally, preventing the user from following any conversation.

Helping Johan to eavesdrop

A possible solution to Johan’s problem is to simply amplify the speaker closest to him, or the person he is looking at. But what if Johan wants to eavesdrop on what his partner at the other end of the table is saying about him (something he hasn’t been able to do for years)?

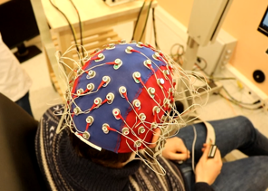

To achieve this, we would have to obtain information about the conversation Johan wants to focus on directly from his brain. This seemingly impossible feat is precisely what our interdisciplinary team at KU Leuven is working on. By measuring the brain’s electrical activity with an electroencephalogram (EEG), using sensors placed on the scalp, we can use these data to find out whom Johan wants to listen to.

Our team is designing algorithms to read the EEG and determine what a person wants to focus on. For example, we use artificial intelligence to reconstruct features of the speech signal that a user is paying attention to. By matching those reconstructed features with all the speech signals that the hearing aid picks up, we can identify the correct speaker. The hearing aid can then suppress all other conversations and amplify the right conversation.
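The matching step described above can be illustrated with stimulus reconstruction, a widely used approach in which a linear “backward” decoder maps time-lagged EEG onto the speech envelope. The following is a minimal sketch on synthetic data, not the team’s AI model: the ridge-regression decoder, the 64 Hz sampling rate, the 16 simulated channels, the 4-sample neural delay and every function name are illustrative assumptions.

```python
import numpy as np

def lagged(eeg, n_lags):
    """Stack time-lagged copies of each EEG channel: (T, C) -> (T, C * n_lags).

    Column block `lag` holds eeg[t + lag]: the brain responds to sound with a
    delay, so the envelope at time t is reconstructed from slightly later EEG.
    """
    T, C = eeg.shape
    X = np.zeros((T, C * n_lags))
    for lag in range(n_lags):
        X[:T - lag, lag * C:(lag + 1) * C] = eeg[lag:]
    return X

def train_decoder(eeg, attended_env, n_lags=8, reg=1e-3):
    """Ridge-regression backward model from lagged EEG to the attended envelope."""
    X = lagged(eeg, n_lags)
    R = X.T @ X
    lam = reg * np.trace(R) / R.shape[0]  # regularization relative to mean eigenvalue
    return np.linalg.solve(R + lam * np.eye(R.shape[0]), X.T @ attended_env)

def decode_attention(eeg, candidate_envs, decoder, n_lags=8):
    """Reconstruct an envelope from EEG and pick the best-correlated speaker."""
    recon = lagged(eeg, n_lags) @ decoder
    scores = [np.corrcoef(recon, env)[0, 1] for env in candidate_envs]
    return int(np.argmax(scores)), scores

# --- Synthetic data (all values are assumptions for illustration) ---
rng = np.random.default_rng(0)
FS = 64                                      # assumed sampling rate in Hz
N_CH, DELAY = 16, 4                          # assumed channel count, neural delay (samples)
GAIN_ATT = rng.standard_normal(N_CH)         # how strongly each channel tracks the attended speaker
GAIN_UNATT = 0.3 * rng.standard_normal(N_CH) # weaker tracking of the ignored speaker

def fake_envelope(n):
    # Smoothed Gaussian noise as a stand-in for a speech envelope
    return np.convolve(rng.standard_normal(n), np.ones(8) / 8, mode="same")

def fake_eeg(attended, unattended, noise=2.0):
    def delayed(x):
        out = np.zeros_like(x)
        out[DELAY:] = x[:-DELAY]
        return out
    T = len(attended)
    return (np.outer(delayed(attended), GAIN_ATT)
            + np.outer(delayed(unattended), GAIN_UNATT)
            + noise * rng.standard_normal((T, N_CH)))

# Train on 60 s in which the listener attends speaker A
env_a, env_b = fake_envelope(60 * FS), fake_envelope(60 * FS)
decoder = train_decoder(fake_eeg(env_a, env_b), env_a)

# Test on a new 30 s segment in which the listener attends speaker B (index 1)
env_a, env_b = fake_envelope(30 * FS), fake_envelope(30 * FS)
choice, scores = decode_attention(fake_eeg(env_b, env_a), [env_a, env_b], decoder)
print(choice, np.round(scores, 2))
```

The decision rests on a correlation estimated over a window of data, so shorter windows give noisier scores, which is why this kind of decoder needs relatively long stretches of EEG.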

Where’s that sound coming from?

Problem solved? Not quite. The EEG can be compared to a blurry video: the relevant brain activity is buried under all kinds of other activity, with countless processes going on at any given moment. To find out what is happening in that blurry video, we need enough footage – at least 30 seconds. Unfortunately, that’s too slow for Johan, who’ll miss what his partner is saying right this minute.

To overcome this delay, we have developed a new technique that uses brain patterns to determine the spatial direction of Johan’s attention. Thanks to colleagues at Columbia University in New York, we know that different brain processes are active when you listen to the left than when you listen to the right. Using AI to tease out these specific brain patterns, we can now tell in less than 2 seconds which direction someone is listening to. The hearing aid can then amplify the speaker at that location.

Video: This demo shows how we can control a hearing aid by using brain patterns to determine which direction in the room the user is paying attention to.
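The Geirnaert, Francart and Bertrand paper in the reading list bases fast directional decoding on common spatial patterns (CSP): spatial filters whose output power differs as much as possible between “attend left” and “attend right”. The sketch below illustrates CSP on synthetic data and is not the team’s implementation. The two-source data model, the 8 channels, the 1-second trials and all function names are assumptions, and the linear discriminant analysis (LDA) classifier is a common pairing with CSP rather than a detail taken from this article.

```python
import numpy as np

def spatial_cov(trials):
    """Average spatial covariance of (channels x samples) trials."""
    covs = []
    for x in trials:
        x = x - x.mean(axis=1, keepdims=True)
        covs.append(x @ x.T / x.shape[1])
    return np.mean(covs, axis=0)

def csp_filters(trials_a, trials_b, n_pairs=1):
    """CSP filters: solve S_a w = lambda (S_a + S_b) w and keep both extremes."""
    S_a, S_b = spatial_cov(trials_a), spatial_cov(trials_b)
    # Whiten the composite covariance, then diagonalize class a in that space
    evals, evecs = np.linalg.eigh(S_a + S_b)
    P = evecs @ np.diag(evals ** -0.5) @ evecs.T
    _, B = np.linalg.eigh(P @ S_a @ P)
    W = P @ B  # columns sorted by ascending power ratio for class a
    return np.hstack([W[:, :n_pairs], W[:, -n_pairs:]])

def log_power(trials, W):
    """Log-variance of each CSP-filtered signal: one feature row per trial."""
    return np.array([np.log(np.var(W.T @ x, axis=1)) for x in trials])

def fit_lda(F_a, F_b):
    """Two-class LDA with pooled covariance; score > 0 means class b."""
    mu_a, mu_b = F_a.mean(axis=0), F_b.mean(axis=0)
    S = np.cov(np.vstack([F_a - mu_a, F_b - mu_b]).T)
    w = np.linalg.solve(S, mu_b - mu_a)
    return w, -w @ (mu_a + mu_b) / 2

# --- Synthetic data (hypothetical model: one source per attended side) ---
rng = np.random.default_rng(1)
N_CH, N_SAMP = 8, 64                    # assumed: 8 channels, 1-s trials at 64 Hz
MIX = rng.standard_normal((N_CH, 2))    # how the "left" and "right" sources reach the sensors

def fake_trials(n, attend_left):
    # The source tied to the attended side gets twice the amplitude
    scale = np.array([[2.0], [1.0]]) if attend_left else np.array([[1.0], [2.0]])
    return [MIX @ (scale * rng.standard_normal((2, N_SAMP)))
            + rng.standard_normal((N_CH, N_SAMP)) for _ in range(n)]

left, right = fake_trials(100, True), fake_trials(100, False)
W = csp_filters(left, right)
w, b = fit_lda(log_power(left, W), log_power(right, W))

test_left, test_right = fake_trials(50, True), fake_trials(50, False)
features = np.concatenate([log_power(test_left, W), log_power(test_right, W)])
pred_right = features @ w + b > 0
truth_right = np.array([False] * 50 + [True] * 50)
accuracy = np.mean(pred_right == truth_right)
print(f"direction accuracy on 1-s synthetic trials: {accuracy:.2f}")
```

One reason CSP suits a fast decision is that, once the filters and classifier are trained, each decision only needs the log-power of a few filtered channels over a short window, rather than a correlation estimated over many seconds.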

This new technology is very promising due to its high speed, but it also raises many questions. For example, it is currently unclear exactly which brain processes are active when we listen to a specific direction. The answers to these questions will have a significant impact on the practical applicability of this innovative technique.

There’s work to be done

Much remains to be done before this promising technology becomes a reality. Among other things, measuring the EEG with wearable sensors remains a challenge. We are currently testing the technology in different scenarios and environments, and experimenting with self-learning algorithms that automatically adapt to new situations.

By overcoming these hurdles, we hope to design a brain-controlled hearing aid that improves the lives of people like Johan – helping people with hearing aids rediscover the joys of Christmas parties and tune in to the conversations of their loved ones with ease.

Further reading:

• S. Geirnaert et al., “Electroencephalography-Based Auditory Attention Decoding: Toward Neurosteered Hearing Devices,” in IEEE Signal Processing Magazine, vol. 38, no. 4, pp. 89-102, July 2021.

• S. Geirnaert, T. Francart and A. Bertrand, “Fast EEG-Based Decoding Of The Directional Focus Of Auditory Attention Using Common Spatial Patterns,” in IEEE Transactions on Biomedical Engineering, vol. 68, no. 5, pp. 1557-1568, May 2021.

• J. O’Sullivan et al., “Attentional Selection in a Cocktail Party Environment Can Be Decoded from Single-Trial EEG,” in Cerebral Cortex, vol. 25, no. 7, pp. 1697-1706, July 2015.

• KU Leuven Auditory attention decoding team (ESAT-STADIUS and Dept. of Neurosciences, ExpORL): Alexander Bertrand, Tom Francart, Simon Geirnaert, Nicolas Heintz, Iustina Rotaru, Iris Van de Ryck